A New Perspective on the US-China AI Race: 2025 Ollama Deployment Comparison and Global AI Model Trend Insights

1. Introduction

Ollama is a popular open-source tool designed to simplify the process of running, creating, and sharing large language models (LLMs) locally. It bundles model weights, configuration, and data into a package defined by a Modelfile, and provides an API for interacting with these models. This enables developers and researchers to easily deploy and experiment with various advanced AI models on personal computers or servers.

This report aims to reveal deployment trends, model preferences, geographical distribution, and network characteristics by analyzing data from 174,590 Ollama instances deployed globally.

Note: Data statistics in Chapter 5 and Chapter 7 are sourced from all 174,590 instances. Data in Chapter 6 is sourced from accessible instances. For security reasons, we have not listed statistics on Ollama versions.

Data as of: April 24, 2025.

Report Source: Tenthe AI https://tenthe.com

Author: Ryan

2. Executive Summary

This report is based on the analysis of scan data and API probing of publicly available Ollama instances worldwide. Key findings include:

Globally, among approximately 174,590 records initially identified via Fofa (99,412 unique IPs), 41,021 Ollama instances with accessible APIs were successfully probed, distributed across 24,038 unique IP addresses (an accessibility rate of approximately 24.18%).

Geographically, the United States and China are the countries with the highest number of Ollama deployments. Cloud service providers, particularly AWS, Alibaba Cloud, and Tencent Cloud, are the primary hosts for Ollama instances.

Model deployments show diversity, with llama3, deepseek-r1, mistral, and qwen series models being widely popular. Among them, llama3:latest and deepseek-r1:latest are the two most widely deployed model tags.

Models with 7B-8B parameters are the top choice for users, while 4-bit quantized models like Q4_K_M and Q4_0 are widely adopted for their good balance between performance and resource consumption.

The default port 11434 is the most commonly used, and most instances expose services via the HTTP protocol.

3. Data Sources and Methodology

The data for this report primarily comes from two stages:

Initial Scan: Using network space search engines like Fofa, with the condition app="Ollama" && is_domain=false, to initially identify potential Ollama instances deployed globally. This stage found 174,590 records, involving 99,412 unique IPs after deduplication.

API Verification and Data Enrichment: Probing the ip:port/api/tags API endpoint for the initially scanned IP addresses to confirm the accessibility of Ollama services and obtain information about the specific AI models deployed. This stage confirmed 41,021 successfully responsive Ollama instances (from 24,038 unique IPs).

The final data is stored in the ollama table.

The analysis in this report is primarily based on data from the ollama table, which contains records of successfully probed APIs and their detailed information, including IP, port, geographical location, and the JSON response (containing the model list), etc.
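As a rough illustration of the API verification stage, the sketch below queries /api/tags on a single host and flattens the returned model list into rows. The helper names (fetch_tags, extract_models), the timeout, and the sample response are assumptions made for illustration; they are not the tooling used to produce this report. Only probe hosts you own or are authorized to test.

```python
import json
import urllib.request


def fetch_tags(host: str, port: int = 11434, timeout: float = 5.0) -> dict:
    """Fetch the raw /api/tags JSON from one Ollama endpoint over plain HTTP."""
    url = f"http://{host}:{port}/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def extract_models(tags: dict) -> list[dict]:
    """Flatten an /api/tags response into one row per model tag."""
    rows = []
    for model in tags.get("models", []):
        details = model.get("details") or {}
        rows.append({
            "model_name": model.get("name"),
            "family": details.get("family"),
            "parameter_size": details.get("parameter_size"),
            "quantization_level": details.get("quantization_level"),
        })
    return rows


# Hypothetical response body, shaped like an /api/tags reply.
sample = {
    "models": [
        {
            "name": "llama3:latest",
            "details": {
                "family": "llama",
                "parameter_size": "8.0B",
                "quantization_level": "Q4_0",
            },
        }
    ]
}
print(extract_models(sample))
```

To try it against a local installation, call extract_models(fetch_tags("127.0.0.1")) while Ollama is running.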

4. Overall Deployment Statistics

Number of initial records from Fofa scan: 174,590

Number of unique IPs from Fofa initial scan: 99,412

Number of Ollama instances successfully accessing /api/tags: 41,021 (from records where status = 'success' in the ollama table)

Number of corresponding unique IP addresses: 24,038 (from records where status = 'success' in the ollama table)

Ratio of accessible IPs to initially identified IPs: (24038 / 99412) * 100% ≈ 24.18%

This indicates that approximately a quarter of the unique IPs identified via Fofa have their /api/tags interface publicly accessible, allowing us to retrieve information about their deployed models.

5. Geographical Distribution Analysis

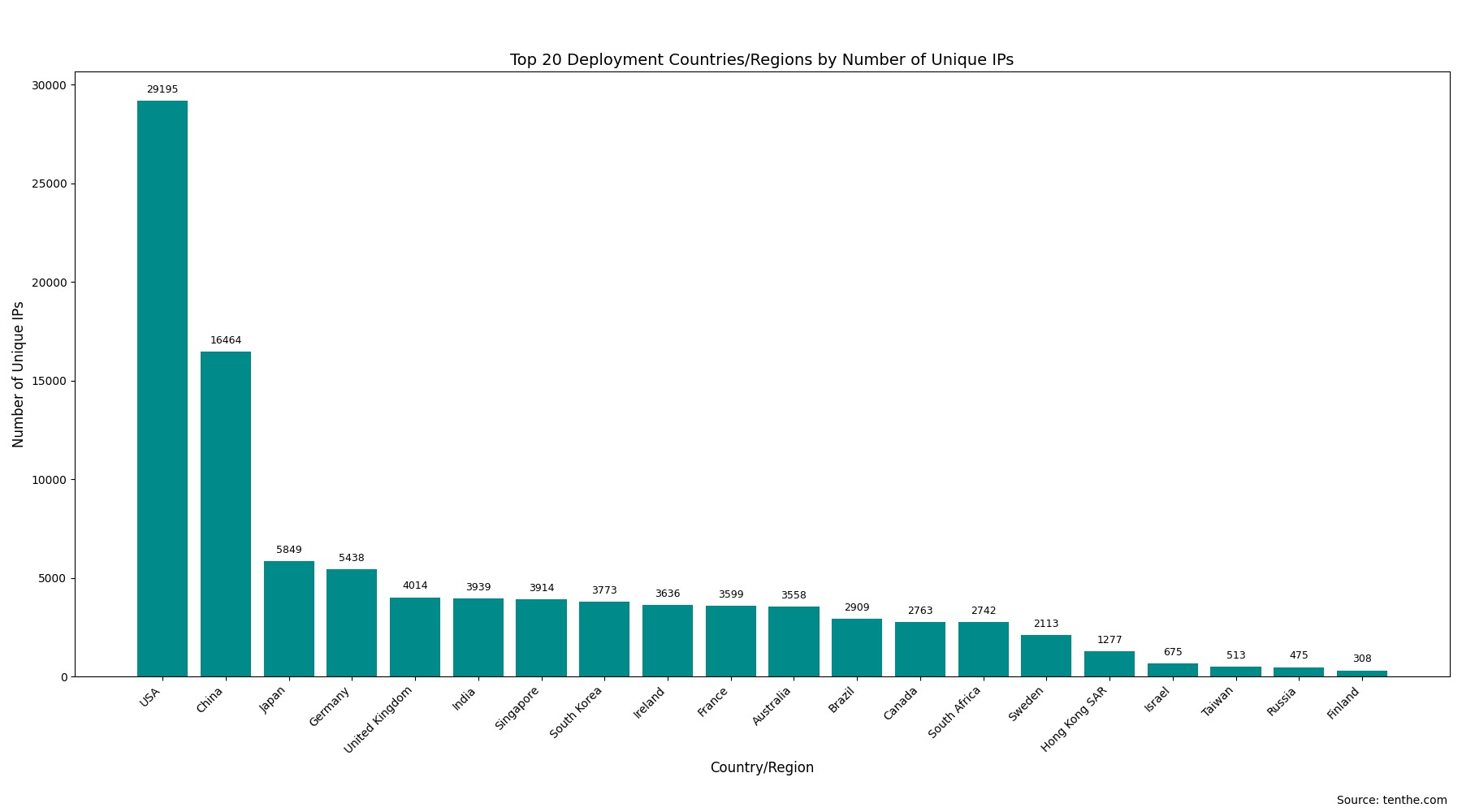

5.1 Top 20 Deployment Countries/Regions

The table below shows the top 20 countries/regions ranked by the number of unique IPs with Ollama instances.

| Rank | Country/Region | Unique IP Count |
|---|---|---|
| 1 | United States | 29195 |
| 2 | China | 16464 |
| 3 | Japan | 5849 |
| 4 | Germany | 5438 |
| 5 | United Kingdom | 4014 |
| 6 | India | 3939 |
| 7 | Singapore | 3914 |
| 8 | South Korea | 3773 |
| 9 | Ireland | 3636 |
| 10 | France | 3599 |
| 11 | Australia | 3558 |
| 12 | Brazil | 2909 |
| 13 | Canada | 2763 |
| 14 | South Africa | 2742 |
| 15 | Sweden | 2113 |
| 16 | Hong Kong SAR, China | 1277 |
| 17 | Israel | 675 |
| 18 | Taiwan, China | 513 |
| 19 | Russia | 475 |
| 20 | Finland | 308 |

5.2 Top 20 Global City Deployments

The table below shows the top 20 cities worldwide ranked by the number of unique IPs with Ollama instances.

| Rank | City | Country/Region | Unique IP Count |
|---|---|---|---|
| 1 | Ashburn | United States | 5808 |
| 2 | Portland | United States | 5130 |
| 3 | Singapore | Singapore | 3914 |
| 4 | Frankfurt am Main | Germany | 3908 |
| 5 | Beijing | China | 3906 |
| 6 | London | United Kingdom | 3685 |
| 7 | Columbus | United States | 3672 |
| 8 | Mumbai | India | 3637 |
| 9 | Dublin | Ireland | 3631 |
| 10 | Tokyo | Japan | 3620 |
| 11 | Sydney | Australia | 3487 |
| 12 | Paris | France | 3175 |
| 13 | San Jose | United States | 2815 |
| 14 | Sao Paulo | Brazil | 2753 |
| 15 | Cape Town | South Africa | 2692 |
| 16 | Montreal | Canada | 2535 |
| 17 | Seattle | United States | 2534 |
| 18 | Hangzhou | China | 2447 |
| 19 | Seoul | South Korea | 2327 |
| 20 | Osaka | Japan | 2184 |

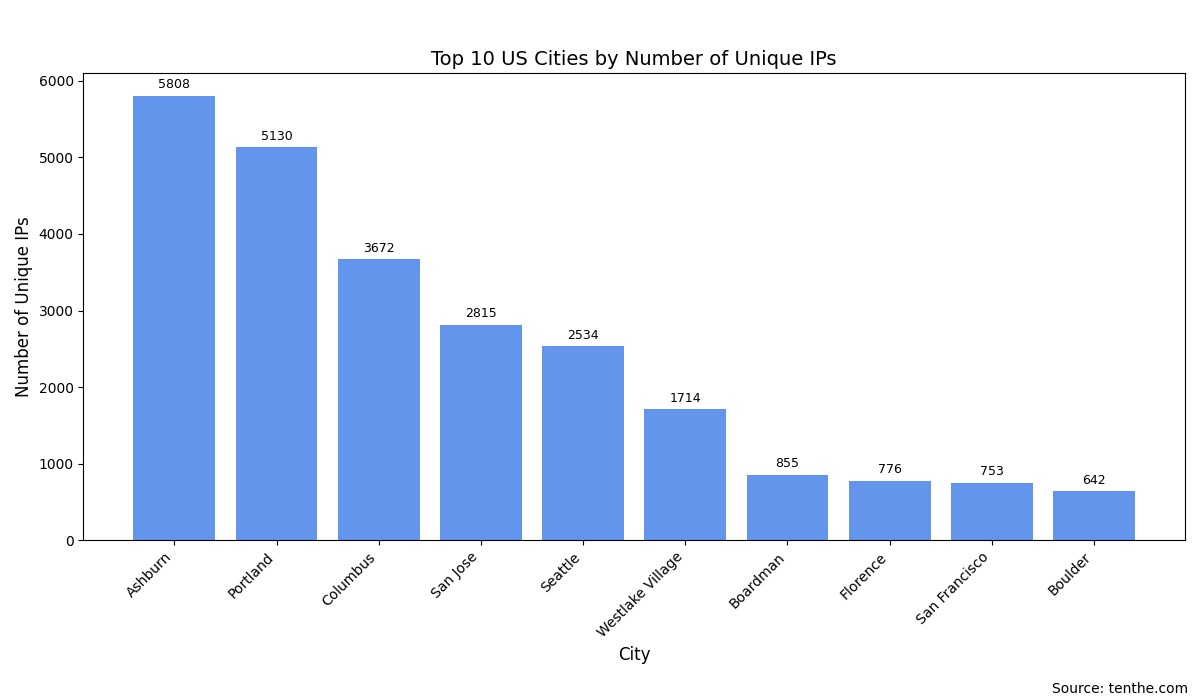

5.3 Top 10 US City Distribution

| Rank | City | Unique IP Count |
|---|---|---|
| 1 | Ashburn | 5808 |
| 2 | Portland | 5130 |
| 3 | Columbus | 3672 |
| 4 | San Jose | 2815 |
| 5 | Seattle | 2534 |
| 6 | Westlake Village | 1714 |
| 7 | Boardman | 855 |
| 8 | Florence | 776 |
| 9 | San Francisco | 753 |
| 10 | Boulder | 642 |

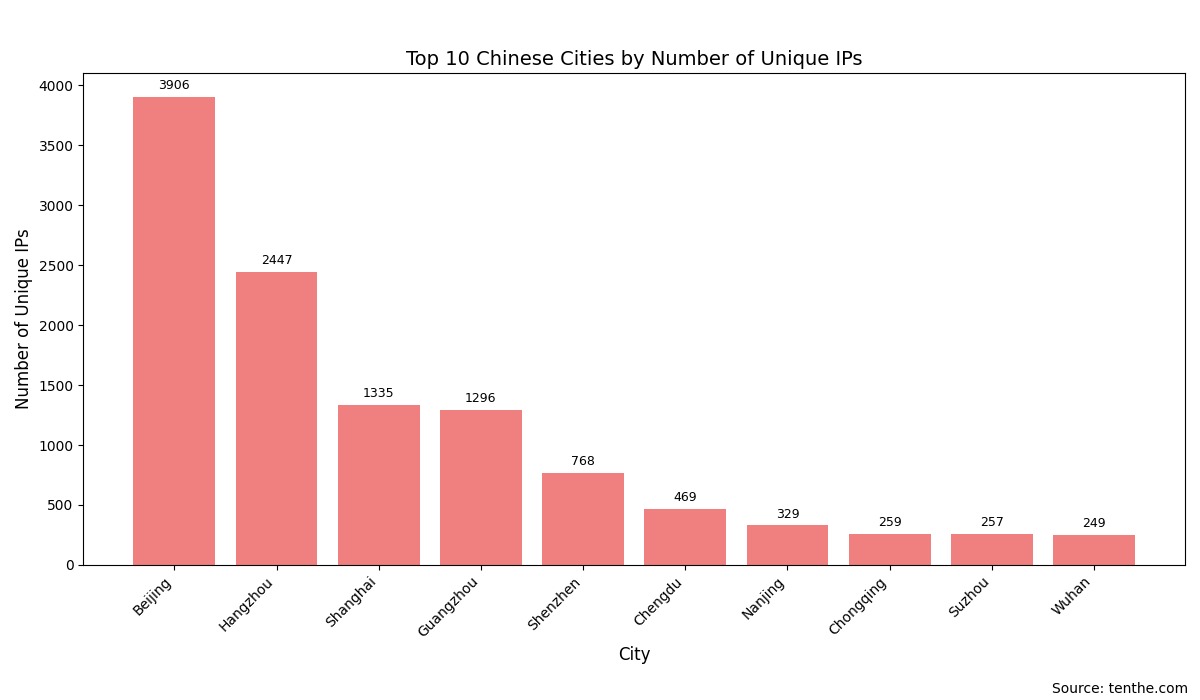

5.4 Top 10 Mainland China City Distribution

Deployments in Hong Kong and Taiwan are not reflected in the Top 10 cities table as they are already included in the country/region statistics.

| Rank | City | Country | Unique IP Count |
|---|---|---|---|
| 1 | Beijing | China | 3906 |
| 2 | Hangzhou | China | 2447 |
| 3 | Shanghai | China | 1335 |
| 4 | Guangzhou | China | 1296 |
| 5 | Shenzhen | China | 768 |
| 6 | Chengdu | China | 469 |
| 7 | Nanjing | China | 329 |
| 8 | Chongqing | China | 259 |
| 9 | Suzhou | China | 257 |
| 10 | Wuhan | China | 249 |

5.5 US-China Top 10 City Deployment Comparison

To more intuitively compare Ollama deployments at the city level in the US and China, the table below juxtaposes the unique IP deployment counts for the top 10 cities in both countries:

| Rank | US City (Top 10) | US Unique IP Count | China City (Top 10) | China Unique IP Count |
|---|---|---|---|---|
| 1 | Ashburn | 5808 | Beijing | 3906 |
| 2 | Portland | 5130 | Hangzhou | 2447 |
| 3 | Columbus | 3672 | Shanghai | 1335 |
| 4 | San Jose | 2815 | Guangzhou | 1296 |
| 5 | Seattle | 2534 | Shenzhen | 768 |
| 6 | Westlake Village | 1714 | Chengdu | 469 |
| 7 | Boardman | 855 | Nanjing | 329 |
| 8 | Florence | 776 | Chongqing | 259 |
| 9 | San Francisco | 753 | Suzhou | 257 |
| 10 | Boulder | 642 | Wuhan | 249 |

Brief Commentary:

Leading City Volume: The top 3 US cities (Ashburn, Portland, Columbus) each have over 3,000 unique IPs with Ollama deployments. China's top city (Beijing) has over 3,000 deployments, and its second city (Hangzhou) has over 2,000.

Technology and Economic Hubs: Many of the listed cities in both countries are well-known technology innovation centers or important economic regions.

Data Center Regions: The inclusion of US cities like Ashburn also reflects that Ollama instances may be largely deployed within cloud servers and data centers.

Distribution Differences: Overall, the total number of IPs in the US Top 10 cities is significantly higher than in China's Top 10 cities. However, both countries show a pattern where a few core cities account for the vast majority of Ollama deployments.

This city-level comparison further reveals that the promotion and application of Ollama, as a developer tool, are closely linked to regional technological ecosystems and industrial development.
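The "Distribution Differences" point can be checked with a few lines of arithmetic. The sketch below sums the top 10 city counts from the tables above and compares them with the country totals from section 5.1. It assumes the city counts are subsets of the country totals, which the report does not state explicitly.

```python
# Unique IP counts copied from the US and China top 10 city tables.
us_top10 = {
    "Ashburn": 5808, "Portland": 5130, "Columbus": 3672, "San Jose": 2815,
    "Seattle": 2534, "Westlake Village": 1714, "Boardman": 855,
    "Florence": 776, "San Francisco": 753, "Boulder": 642,
}
cn_top10 = {
    "Beijing": 3906, "Hangzhou": 2447, "Shanghai": 1335, "Guangzhou": 1296,
    "Shenzhen": 768, "Chengdu": 469, "Nanjing": 329, "Chongqing": 259,
    "Suzhou": 257, "Wuhan": 249,
}
# Country totals from the top 20 countries/regions table.
us_country_total = 29195
cn_country_total = 16464

us_sum = sum(us_top10.values())
cn_sum = sum(cn_top10.values())
us_share = us_sum / us_country_total
cn_share = cn_sum / cn_country_total

print(f"US top 10 cities: {us_sum} IPs, {us_share:.1%} of US IPs")
print(f"China top 10 cities: {cn_sum} IPs, {cn_share:.1%} of China IPs")
```

Under that assumption, the top 10 cities cover a larger fraction of deployments in the US than in China, even though both are concentrated.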

6. Model Analysis

6.1 Brief Overview of AI Models, Parameters, and Quantization

Ollama supports a variety of open-source large language models. These models are typically distinguished by the following features:

6.1.1 Common Model Families

The current open-source community has seen a surge in excellent LLM families, each with its own characteristics:

Llama Series (Meta AI): Such as Llama 2, Llama 3, Code Llama. Known for its powerful general capabilities and extensive community support, leading to numerous fine-tuned versions. Models like llama3.1, hermes3 seen in our data are often based on the Llama architecture.

Mistral Series (Mistral AI): Such as Mistral 7B, Mixtral 8x7B. Gaining attention for efficiency and high performance, especially its MoE (Mixture of Experts) models.

Gemma Series (Google): Such as Gemma 2B, Gemma 7B. Open-weight models released by Google, with technology derived from their more powerful Gemini models.

Phi Series (Microsoft): Such as Phi-2, Phi-3. Focuses on small-sized yet capable models, emphasizing "SLMs (Small Language Models)".

DeepSeek Series (DeepSeek AI): Such as DeepSeek Coder, DeepSeek LLM. Chinese AI models that excel in coding and general tasks.

Qwen Series (Alibaba Tongyi Qianwen): Such as Qwen1.5. A series of models launched by Alibaba DAMO Academy, supporting multiple languages and tasks.

There are many other excellent models, such as Yi (01.AI), Command R (Cohere), etc.

Ollama, through its Modelfile mechanism, allows users to easily use these base models or their fine-tuned versions. Model names often follow the format family:size-variant-quantization, for example, llama3:8b-instruct-q4_K_M.
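The naming pattern can be made concrete with a small parser. This is a minimal sketch, assuming the family:size-variant-quantization convention described above; the regular expressions and the ModelTag type are illustrative choices, and names that do not follow the convention (for example sizes written like 8x7b) simply end up in the variant field.

```python
import re
from typing import NamedTuple, Optional


class ModelTag(NamedTuple):
    family: str
    size: Optional[str]
    variant: Optional[str]
    quantization: Optional[str]


# Heuristic patterns (assumptions): sizes look like "8b" or "135m",
# quantization labels look like "q4_K_M", "q8_0", "f16", or "fp16".
SIZE_RE = re.compile(r"^\d+(\.\d+)?[bm]$", re.IGNORECASE)
QUANT_RE = re.compile(r"^(q\d.*|f16|f32|fp16)$", re.IGNORECASE)


def parse_model_tag(name: str) -> ModelTag:
    """Split a model name into family, size, variant, and quantization."""
    family, _, tag = name.partition(":")
    size = None
    quant = None
    variant_parts = []
    for part in (tag.split("-") if tag else []):
        if size is None and SIZE_RE.match(part):
            size = part
        elif quant is None and QUANT_RE.match(part):
            quant = part
        else:
            variant_parts.append(part)
    variant = "-".join(variant_parts) or None
    return ModelTag(family, size, variant, quant)


print(parse_model_tag("llama3:8b-instruct-q4_K_M"))
print(parse_model_tag("llama3:latest"))
```

A tag such as latest carries no size or quantization information, which is why the report relies on the metadata fields rather than on names alone.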

6.1.2 Model Parameters (Parameter Size)

The number of model parameters (usually in B - Billion; or M - Million) is an important indicator of a model's scale and potential capability. Common parameter sizes include:

Small Models: < 7B (e.g., 1.5B, 2B, 3B). Usually run fast with low resource consumption, suitable for specific tasks or resource-constrained environments.

Medium Models: 7B, 8B, 13B. Achieve a good balance between capability and resource consumption, currently one of the most popular sizes in the community.

Large Models: 30B, 33B, 40B, 70B+. Generally more capable but also require more computational resources (RAM, VRAM) and longer inference times.

The parameter_size field in our data (e.g., "8.0B", "7B", "134.52M") indicates this.
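Because parameter_size arrives as a string, comparing sizes requires converting it to a number first. The helper below is a minimal sketch of that conversion; the function name and the set of accepted suffixes are assumptions, and only the "B" and "M" suffixes appear in the examples quoted above.

```python
def parse_parameter_size(text: str) -> float:
    """Convert strings like '8.0B' or '134.52M' into a parameter count."""
    units = {"K": 1e3, "M": 1e6, "B": 1e9, "T": 1e12}
    cleaned = text.strip().upper()
    if not cleaned or cleaned[-1] not in units:
        raise ValueError(f"unrecognized parameter size: {text!r}")
    return float(cleaned[:-1]) * units[cleaned[-1]]


for raw in ["8.0B", "7B", "134.52M"]:
    print(raw, "->", parse_parameter_size(raw))
```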

6.1.3 Quantization Versions (Quantization Level)

Quantization is a technique to reduce model size and accelerate inference by lowering the numerical precision of model weights (e.g., from 16-bit floating-point FP16 to 4-bit integer INT4).

Common Quantization Levels: Ollama and the GGUF format (used by llama.cpp) support various quantization strategies, such as Q2_K, Q3_K_S, Q3_K_M, Q3_K_L, Q4_0, Q4_K_M, Q5_K_M, Q6_K, Q8_0, etc.

The number (e.g., 2, 3, 4, 5, 6, 8) roughly indicates the number of bits.

K-series quantization (e.g., Q4_K_M) refers to improved quantization methods introduced in llama.cpp, generally achieving better quality at the same bit count.

_S, _M, _L usually denote small, medium, and large variants of K-quants, which keep different parts of the model at different precision.

F16 (FP16) represents 16-bit floating-point, often considered unquantized or a base quantization version. F32 (FP32) is full precision.

Trade-off: More aggressive quantization (lower bit count) results in smaller, faster models, but usually at some cost in output quality. Users need to choose based on their hardware and model quality requirements.

The quantization_level field in our data (e.g., "Q4_K_M", "F16") indicates this.
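To see why bit count matters for hardware requirements, the sketch below estimates weight storage from a parameter count and the nominal bit width implied by the quantization label. It is a back-of-the-envelope approximation under stated assumptions: the function names are hypothetical, and real K-quant files are somewhat larger because they also store scaling data and keep some tensors at higher precision.

```python
import re


def nominal_bits(quantization_level: str) -> int:
    """Return the nominal bits per weight implied by a quantization label."""
    level = quantization_level.upper()
    if level in ("F32", "FP32"):
        return 32
    if level in ("F16", "FP16", "BF16"):
        return 16
    match = re.match(r"I?Q(\d+)", level)
    if not match:
        raise ValueError(f"unrecognized quantization level: {quantization_level!r}")
    return int(match.group(1))


def approx_weight_gb(parameters: float, quantization_level: str) -> float:
    """Rough lower bound on weight storage, in gigabytes (10^9 bytes)."""
    return parameters * nominal_bits(quantization_level) / 8 / 1e9


for level in ["Q4_K_M", "Q8_0", "F16"]:
    print(level, approx_weight_gb(8e9, level), "GB for an 8B model")
```

By this estimate, moving an 8B model from F16 to a 4-bit level cuts weight storage to about a quarter, which is consistent with the popularity of 4-bit levels reported below.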

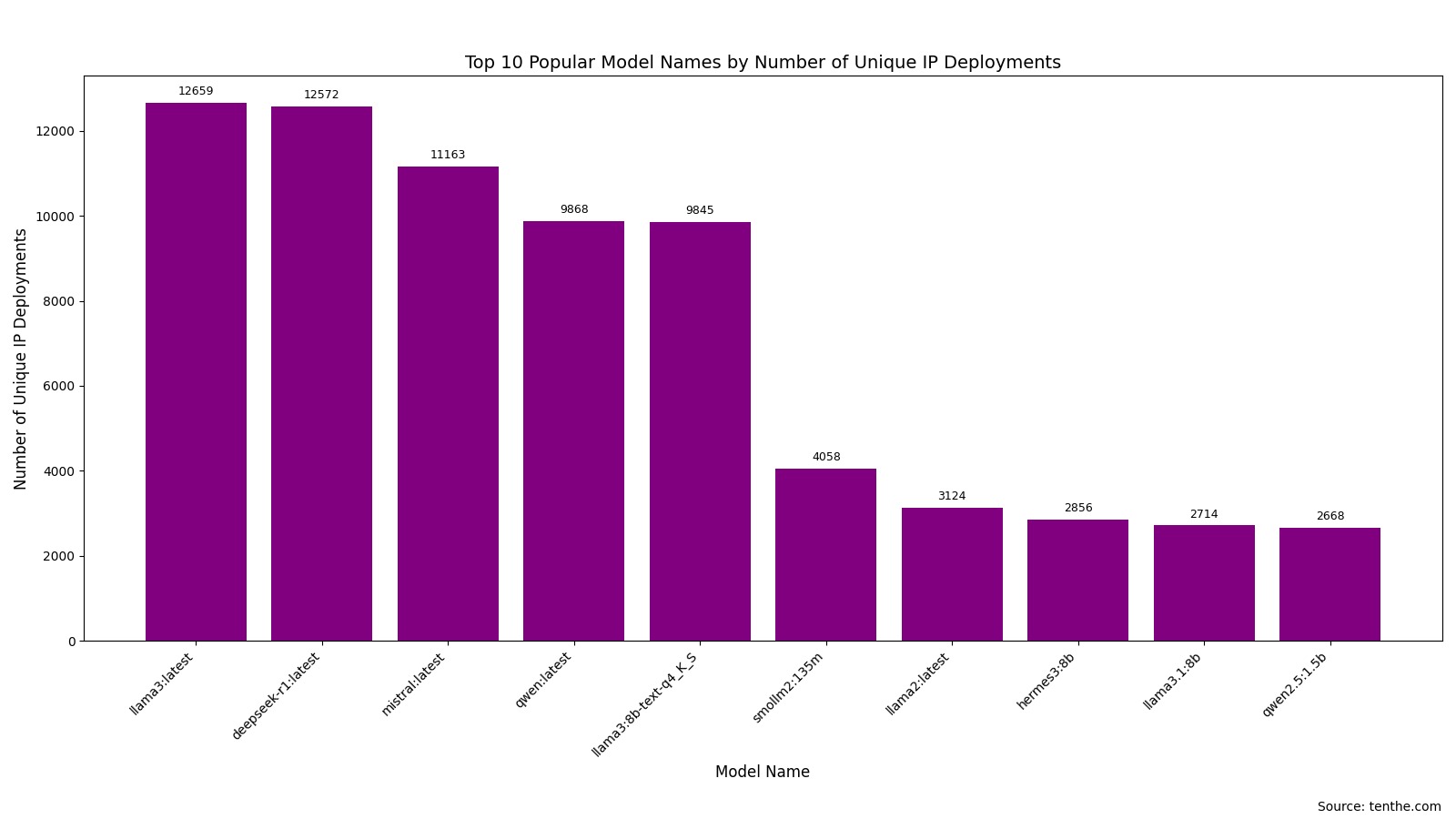

6.2 Top Popular Model Names

The table below shows the Top 10 model tags ranked by the number of unique IP deployments, including their family, parameter size, and quantization level information.

| Rank | Model Name (model_name) | Unique IP Deployments | Total Deployment Instances |
|---|---|---|---|
| 1 | | 12659 | 24628 |
| 2 | | 12572 | 24578 |
| 3 | | 11163 | 22638 |
| 4 | | 9868 | 21007 |
| 5 | | 9845 | 20980 |
| 6 | | 4058 | 5016 |
| 7 | | 3124 | 3928 |
| 8 | | 2856 | 3372 |
| 9 | | 2714 | 3321 |
| 10 | | 2668 | 3391 |

(Note: Unique IP Deployments refers to the number of unique IP addresses that have deployed at least one instance of this model tag. Total Deployment Instances refers to the total number of times this model tag appears in the models list across all IPs. An IP might point to the same model tag multiple times through different means or records, or an IP might run multiple instances of different tags belonging to the same base model.)
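The difference between the two counts in the note above can be sketched as follows. The records are hypothetical (using documentation-reserved IP addresses) and the tuple layout is an assumption about how probe results might be stored; the point is only that one counter counts every appearance while the other deduplicates by IP.

```python
from collections import Counter, defaultdict

# Hypothetical probe records: (ip, port, model_name).
records = [
    ("203.0.113.10", 11434, "llama3:latest"),
    ("203.0.113.10", 8080, "llama3:latest"),   # same IP, second port
    ("203.0.113.11", 11434, "llama3:latest"),
    ("203.0.113.11", 11434, "mistral:latest"),
]

total_instances = Counter()        # every appearance of a model tag
ips_by_model = defaultdict(set)    # distinct IPs per model tag

for ip, _port, model in records:
    total_instances[model] += 1
    ips_by_model[model].add(ip)

unique_ips = {model: len(ips) for model, ips in ips_by_model.items()}

for model in total_instances:
    print(model, "unique IPs:", unique_ips[model],
          "total instances:", total_instances[model])
```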

Initial Observations (Popular Model Names):

Models with the :latest tag are very common, such as llama3:latest, deepseek-r1:latest, mistral:latest, qwen:latest. This indicates many users prefer to pull the latest version of models directly.

Llama series models (e.g., llama3:latest, llama3:8b-text-q4_K_S, llama2:latest, llama3.1:8b) occupy multiple spots, showing their strong popularity.

Chinese AI models like deepseek-r1:latest (DeepSeek series) and qwen:latest (Tongyi Qianwen series) also perform impressively, ranking high.

Specific quantized versions like llama3:8b-text-q4_K_S also made it to the top ten, indicating user preference for specific performance/resource consumption balances.

Small models like smollm2:135m and qwen2.5:1.5b also have a considerable number of deployments, meeting the demand for lightweight models.

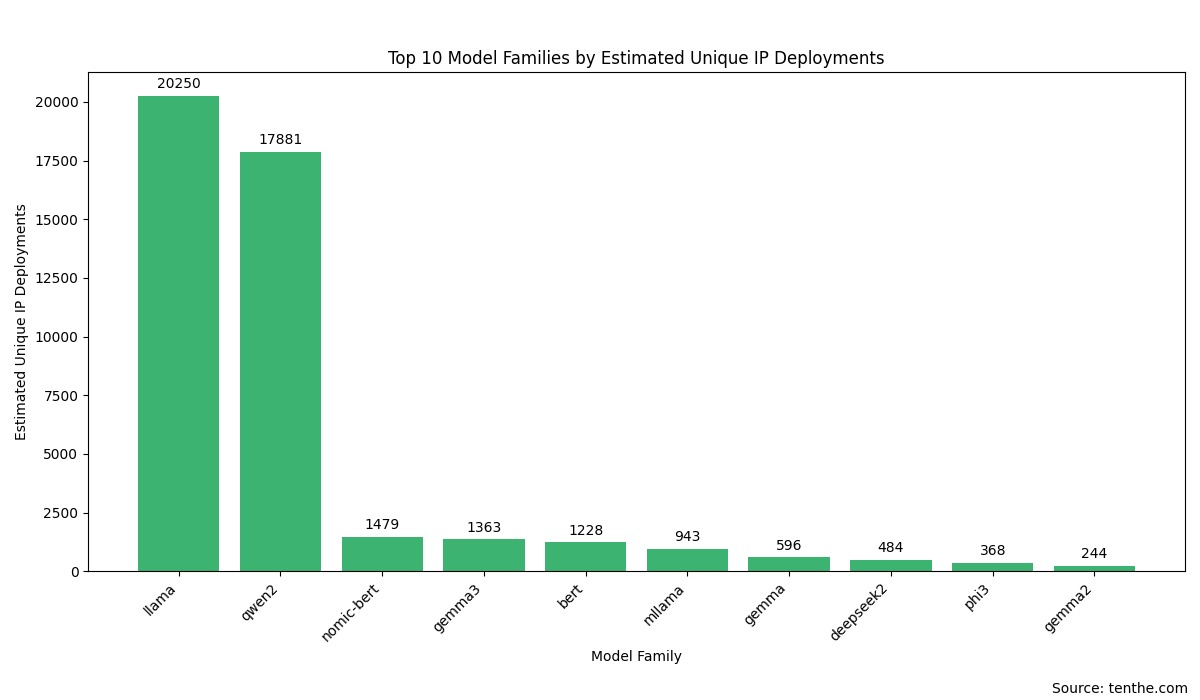

6.3 Top Model Families

Model family (details.family field) represents the base architecture or primary technological lineage of the model. Below are the model families with a higher number of deployments based on our data analysis:

| Rank | Model Family (family) | Unique IP Deployments (Estimated) | Total Deployment Instances (Estimated) |
|---|---|---|---|
| 1 | | ~20250 | ~103480 |
| 2 | | ~17881 | ~61452 |
| 3 | | ~1479 | ~1714 |
| 4 | | ~1363 | ~2493 |
| 5 | | ~1228 | ~2217 |
| 6 | | ~943 | ~1455 |
| 7 | | ~596 | ~750 |
| 8 | | ~484 | ~761 |
| 9 | | ~368 | ~732 |
| 10 | | ~244 | ~680 |

(Note: The specific values here are estimated and summarized based on the previously queried Top 50 model details list and may slightly differ from precise global statistics, but the trend is representative.)

Initial Observations (Popular Model Families):

The llama family holds an absolute dominant position, consistent with Llama series models being the foundation for many modern open-source LLMs and their own widespread application. Its vast ecosystem and numerous fine-tuned versions make it the most popular choice.

qwen2 (Tongyi Qianwen Qwen2 series), as the second largest family, shows its strong competitiveness in China and even globally.

The appearance of nomic-bert and bert is noteworthy. They are not typically considered conversational "large language models" but rather text embedding or other natural language processing base models, yet their high deployment volume suggests Ollama is also widely used for such tasks. Ollama automatically downloads a default embedding model (e.g., nomic-embed-text) when performing certain operations (like generating embedding vectors), which is likely the main reason these families rank high.

Google's gemma series (including gemma3, gemma, gemma2) also shows decent adoption rates.

Other well-known model families like deepseek2 and phi3 also made it to the top ten.

mllama may represent a collection of various Llama-based hybrid, modified, or community-named models.

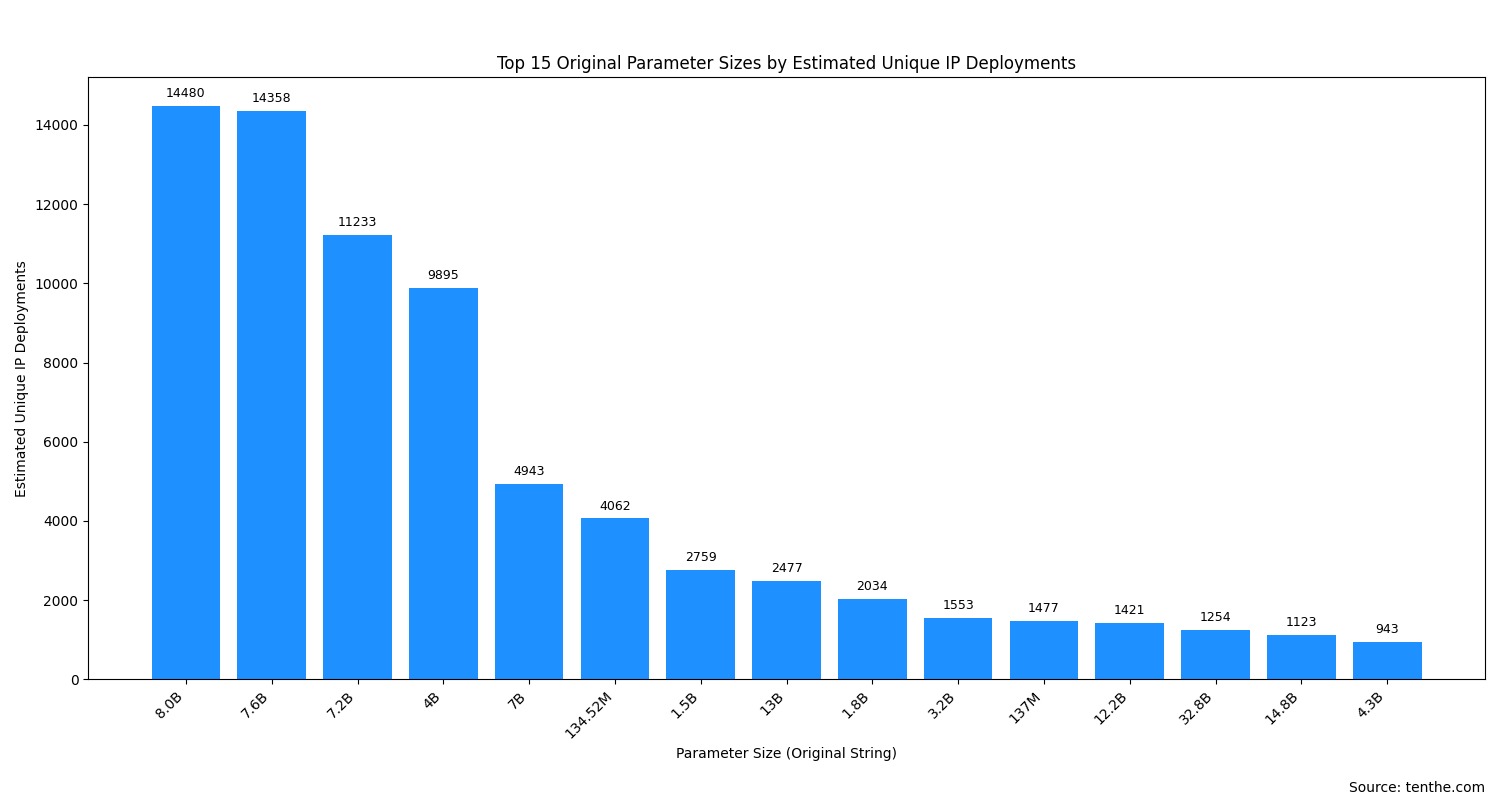

6.4 Top Original Parameter Size Statistics

Model parameter size (details.parameter_size field) is an important indicator of model scale. Due to the varied representation of parameter sizes in the raw data (e.g., "8.0B", "7B", "134.52M"), we directly count these original strings. Below are the parameter size representations with a higher number of deployments:

| Rank | Parameter Size (Original String) | Unique IP Deployments (Estimated) | Total Deployment Instances (Estimated) |
|---|---|---|---|
| 1 | | ~14480 | ~52577 |
| 2 | | ~14358 | ~28105 |
| 3 | | ~11233 | ~22907 |
| 4 | | ~9895 | ~21058 |
| 5 | | ~4943 | ~11738 |
| 6 | | ~4062 | ~5266 |
| 7 | | ~2759 | ~3596 |
| 8 | | ~2477 | ~3311 |
| 9 | | ~2034 | ~2476 |
| 10 | | ~1553 | ~2244 |
| 11 | | ~1477 | ~1708 |
| 12 | | ~1421 | ~2000 |
| 13 | | ~1254 | ~2840 |
| 14 | | ~1123 | ~2091 |
| 15 | | ~943 | ~1194 |

(Note: Values are estimated based on a summary of parameter information from the previously queried Top 50 model details list.)

Initial Observations (Popular Parameter Sizes):

Models in the 7B to 8B scale are the absolute mainstream: "8.0B", "7.6B", "7.2B", "7B" occupy the vast majority of deployments. This typically corresponds to very popular models in the community, such as Llama 2/3 7B/8B series, Mistral 7B, and their various fine-tuned versions. They strike a good balance between performance and resource consumption.

4B scale models also hold an important position: The high deployment of "4B" is noteworthy.

Million-parameter level (M) lightweight models are widespread: The high ranking of "134.52M" and "137M" is likely related to the popularity of embedding models (like nomic-embed-text) or very small specialized models (like the smollm series). These models are small, fast, and suitable for resource-constrained or latency-sensitive scenarios.

Stable demand for small models in the 1B-4B range: Models with parameter sizes like "1.5B", "1.8B", "3.2B", "4.3B" are also favored by a segment of users.

Large models above 10B: Such as "13B", "12.2B", "32.8B", "14.8B", while not having as many unique IP deployments as the 7-8B level, still have a considerable deployment volume, indicating a community demand for more capable models, despite their higher hardware requirements.

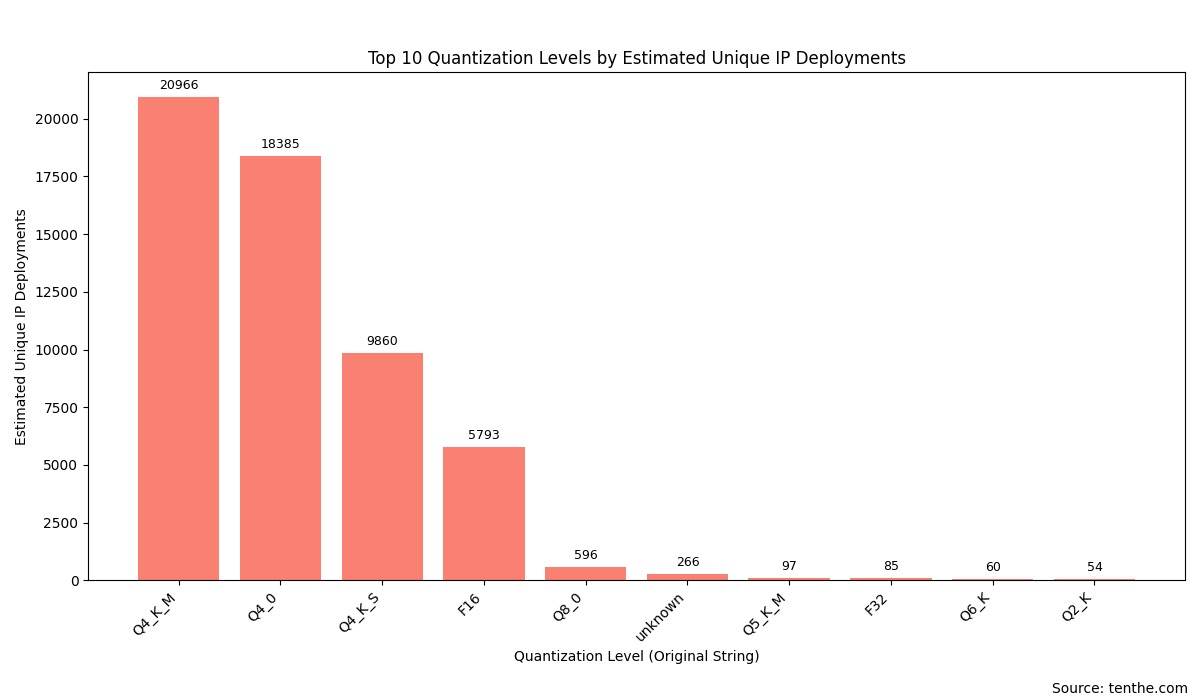

6.5 Top Quantization Level Statistics

Model quantization level (details.quantization_level field) reflects the weight precision adopted by the model to reduce size and accelerate inference. Below are the quantization levels with a higher number of deployments:

| Rank | Quantization Level (Original String) | Unique IP Deployments (Estimated) | Total Deployment Instances (Estimated) |
|---|---|---|---|
| 1 | | ~20966 | ~53688 |
| 2 | | ~18385 | ~88653 |
| 3 | | ~9860 | ~21028 |
| 4 | | ~5793 | ~9837 |
| 5 | | ~596 | ~1574 |
| 6 | | ~266 | ~1318 |
| 7 | | ~97 | ~283 |
| 8 | | ~85 | ~100 |
| 9 | | ~60 | ~178 |
| 10 | | ~54 | ~140 |

(Note: Values are estimated based on a summary of quantization information from the previously queried Top 50 model details list.)

Initial Observations (Popular Quantization Levels):

4-bit quantization is the dominant solution: Q4_K_M, Q4_0, and Q4_K_S, these three 4-bit quantization levels, absolutely top the charts. This clearly indicates that the community widely adopts 4-bit quantization as the preferred solution for achieving the best balance between model performance, inference speed, and resource occupation (especially VRAM).

F16 (16-bit floating-point) still holds a significant place: As an unquantized (or only basically quantized) version, the high deployment of F16 shows that a considerable number of users choose it to pursue the highest model fidelity or because they have sufficient hardware resources.

Q8_0 (8-bit quantization) as a supplement: Provides an option between 4-bit and F16.

Appearance of unknown values: Indicates that quantization level information is missing or non-standard in some model metadata.

6.6 Distribution of AI Computing Power (by Model Parameter Size): China vs. USA

To gain a deeper understanding of how models of different scales are deployed in major countries, we categorized and counted the parameter sizes of models deployed on Ollama instances in the United States and China. Parameter size is often considered an important indicator of model complexity and potential AI computing power demand.

Parameter Scale Classification Standard:

Small: < 1 Billion parameters (< 1B)

Medium: 1 Billion to < 10 Billion parameters (1B to < 10B)

Large: 10 Billion to < 50 Billion parameters (10B to < 50B)

Extra Large: >= 50 Billion parameters (>= 50B)
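The classification standard above maps directly onto a small function. This is a minimal sketch that takes a raw parameter_size string and returns the category; the function name is hypothetical and it only handles the "M" and "B" suffixes seen in this report.

```python
def classify_scale(parameter_size: str) -> str:
    """Map a parameter_size string (e.g. '8.0B', '134.52M') to a scale category."""
    units_in_billions = {"M": 1e-3, "B": 1.0}
    cleaned = parameter_size.strip().upper()
    if not cleaned or cleaned[-1] not in units_in_billions:
        raise ValueError(f"unrecognized parameter size: {parameter_size!r}")
    billions = float(cleaned[:-1]) * units_in_billions[cleaned[-1]]

    if billions < 1:
        return "Small"
    if billions < 10:
        return "Medium"
    if billions < 50:
        return "Large"
    return "Extra Large"


for raw in ["134.52M", "8.0B", "32.8B", "70B"]:
    print(raw, "->", classify_scale(raw))
```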

The table below shows the number of unique IPs deploying models of different parameter scales in the US and China:

| Country | Parameter Scale Category | Unique IP Count |
|---|---|---|
| China | Small (<1B) | 3313 |
| China | Medium (1B to <10B) | 4481 |
| China | Large (10B to <50B) | 1548 |
| China | Extra Large (>=50B) | 280 |
| United States | Small (<1B) | 1368 |
| United States | Medium (1B to <10B) | 6495 |
| United States | Large (10B to <50B) | 1301 |
| United States | Extra Large (>=50B) | 58 |


Data Insights and Analysis:

Medium-sized models are mainstream, but with different focuses:

United States: Deployments of medium-sized models (1B-10B) are absolutely dominant in the US (6495 unique IPs).

China: Medium-sized models (4481 unique IPs) are also the most deployed type in China, but the deployment of small models (<1B) in China (3313 unique IPs) is very considerable.

Significant difference in small models: China's large-scale deployment of small models may reflect a preference for edge computing, mobile AI applications, and similar scenarios.

Deployment of large and extra-large models: China shows higher activity in exploring large and extra-large models (albeit from a smaller base).

Inference on overall computing power investment: The US base in medium-sized models shows the prevalence of practical AI applications. China has an advantage in small models and is actively exploring large models.

Implications for global trends: Medium-sized models are likely popular globally. Different regions may have varying model adoption strategies based on their ecosystems and resource conditions.

By segmenting the model parameter scales in China and the US, we can see the different focuses and development potentials of Ollama applications in the two countries.
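The contrast between the two countries is easier to see as shares rather than raw counts. The sketch below normalizes the table values within each country. Note the assumption: a single IP may host models in several categories, so the denominator here is the sum of category counts, not the number of unique IPs per country.

```python
# Unique IP counts per parameter scale, copied from the table in this section.
counts = {
    "China": {"Small": 3313, "Medium": 4481, "Large": 1548, "Extra Large": 280},
    "United States": {"Small": 1368, "Medium": 6495, "Large": 1301, "Extra Large": 58},
}


def scale_shares(by_scale: dict[str, int]) -> dict[str, float]:
    """Return each category's share of the summed category counts."""
    total = sum(by_scale.values())
    return {scale: n / total for scale, n in by_scale.items()}


for country, by_scale in counts.items():
    shares = scale_shares(by_scale)
    formatted = ", ".join(f"{scale}: {share:.1%}" for scale, share in shares.items())
    print(f"{country}: {formatted}")
```

On this basis, medium-sized models make up a much larger share in the US than in China, while small and extra-large models take a larger share in China.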

7. Network Insights

7.1 Port Usage

11434 (default port): The vast majority (30,722 unique IPs) of Ollama instances run on the default port 11434.

Other common ports: Ports like 80 (1,619 unique IPs), 8080 (1,571 unique IPs), 443 (1,339 unique IPs), etc., are also used, which may indicate that some instances are deployed behind a reverse proxy or users have customized the port.

7.2 Protocol Usage

HTTP: Approximately 65,506 unique IPs have instances serving via the HTTP protocol.

HTTPS: Approximately 43,765 unique IPs have instances serving via the HTTPS protocol.

Most instances are still exposed via unencrypted HTTP, which could pose certain security risks. (Please note: An IP might support both HTTP and HTTPS, so the sum of IP counts here may exceed the total number of unique IPs)
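The overlap mentioned in the note can be bounded with inclusion-exclusion. This is a minimal sketch, assuming both protocol counts are drawn from the 99,412 unique IPs of the initial scan; the function name is illustrative.

```python
def overlap_bounds(http_ips: int, https_ips: int, total_unique_ips: int) -> tuple[int, int]:
    """Bound the number of IPs serving both protocols.

    Lower bound: |A| + |B| - N (inclusion-exclusion), floored at zero.
    Upper bound: the smaller of the two sets.
    """
    lower = max(0, http_ips + https_ips - total_unique_ips)
    upper = min(http_ips, https_ips)
    return lower, upper


low, high = overlap_bounds(65_506, 43_765, 99_412)
print(f"IPs serving both HTTP and HTTPS: between {low} and {high}")
```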

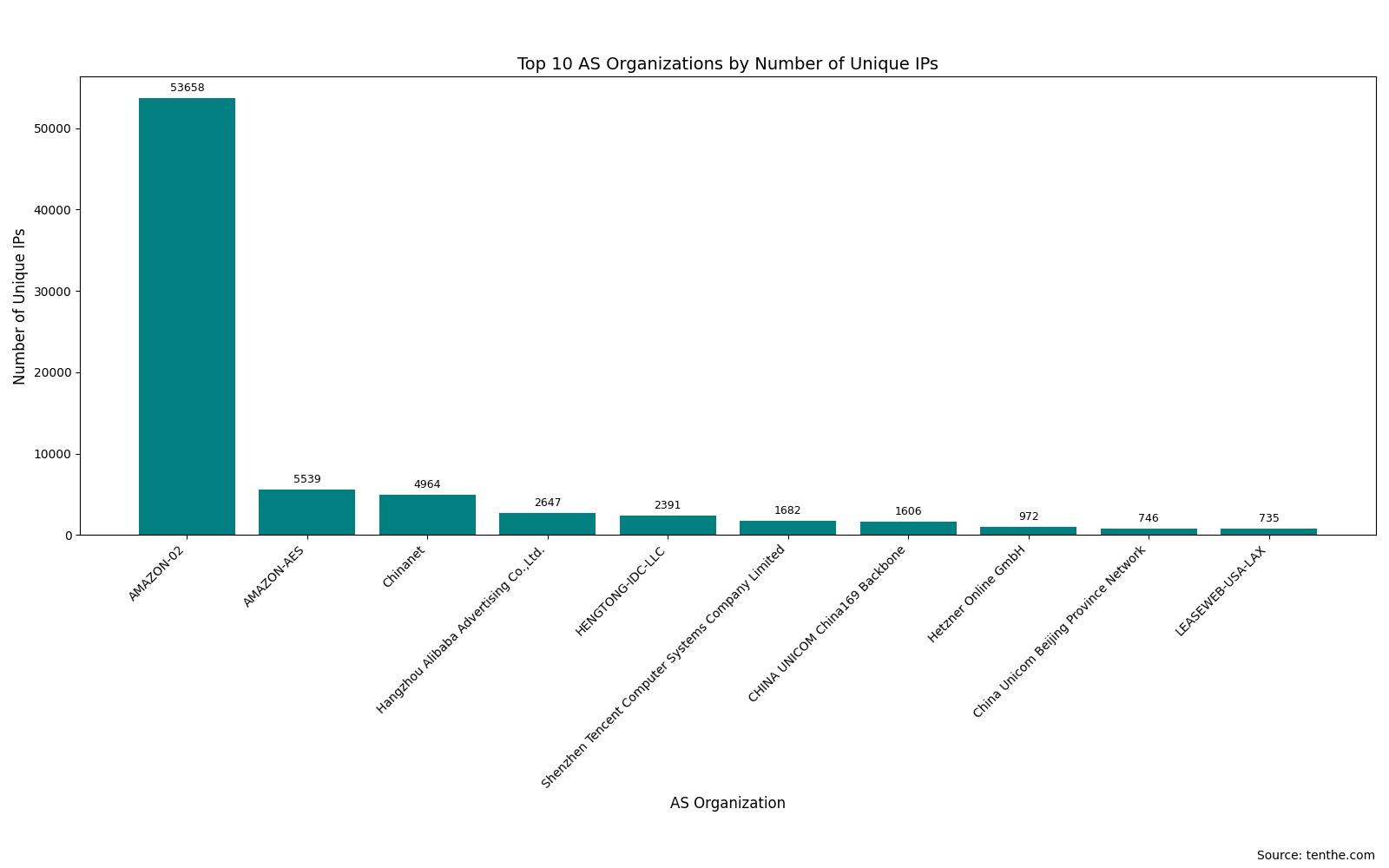

7.3 Main Hosting Providers (AS Organization)

Ollama instance hosting is highly concentrated among cloud service providers.

| Rank | AS Organization | Unique IP Count | Main Associated Provider |
|---|---|---|---|
| 1 | AMAZON-02 | 53658 | AWS |
| 2 | AMAZON-AES | 5539 | AWS |
| 3 | Chinanet | 4964 | China Telecom |
| 4 | Hangzhou Alibaba Advertising Co.,Ltd. | 2647 | Alibaba Cloud |
| 5 | HENGTONG-IDC-LLC | 2391 | Hosting Provider |
| 6 | Shenzhen Tencent Computer Systems Company Limited | 1682 | Tencent Cloud |
| 7 | CHINA UNICOM China169 Backbone | 1606 | China Unicom |
| 8 | Hetzner Online GmbH | 972 | Hetzner |
| 9 | China Unicom Beijing Province Network | 746 | China Unicom (Beijing) |
| 10 | LEASEWEB-USA-LAX | 735 | Leaseweb |

AWS (AMAZON-02, AMAZON-AES) holds the largest share, followed by major Chinese telecom operators and cloud service providers (like Alibaba Cloud, Tencent Cloud). Other hosting providers like Hetzner and Leaseweb also have significant shares.

8. Security and Other Observations

Version Information: For security reasons, we have not listed statistics on Ollama versions.

HTTP Exposure Risk: As mentioned earlier, a large number of Ollama instances are exposed via HTTP without TLS encryption, which can make communication content (e.g., interactions with models) vulnerable to eavesdropping or tampering. Users are advised to configure a reverse proxy and enable HTTPS.

API Accessibility: The model data in this report is based on Ollama instances whose /api/tags interface is publicly accessible. The actual number of deployments may be higher, since some instances might be deployed in private networks or have external access restricted by firewalls.

9. Conclusion and Brief Review

This report, by analyzing 174,590 Ollama records worldwide (99,412 unique IPs, of which 24,038 expose a publicly accessible /api/tags interface), draws the following main conclusions and observations:

1. Global Deployment Overview and Geographical Distribution:

Ollama, as a convenient tool for running large models locally, has been widely deployed globally. This analysis identified 99,412 unique IPs hosting Ollama, of which 24,038 had a publicly accessible API.

High Geographical Concentration: The United States and China are the two countries/regions with the most concentrated Ollama deployments, together accounting for roughly 46% of the identified unique IPs (US 29,195, China 16,464). Countries like Japan, Germany, the UK, India, and Singapore also have notable deployment numbers.

City Hotspots: In the US, cities like Ashburn, Portland, and Columbus lead in deployments; in China, technologically advanced cities such as Beijing, Hangzhou, Shanghai, and Guangzhou are the main deployment locations. This is often related to the concentration of tech companies, data centers, and developer communities.

2. AI Model Deployment Trends:

Popular Model Tags: General latest tags like llama3:latest, deepseek-r1:latest, mistral:latest, qwen:latest are most popular. Specifically optimized versions like llama3:8b-text-q4_K_S are also favored for their good balance.

Dominant Model Families: The llama family leads by an absolute margin, followed by qwen2. The high ranking of embedding model families like nomic-bert and bert is noteworthy, possibly related to Ollama's default behavior.

Parameter Size Preferences: Models with 7B-8B parameters are currently the mainstream. Lightweight models at the million-parameter level and large models above 10B also have their respective markets. A US-China comparison shows the US deploys more medium-sized models, while China is more active in exploring small and extra-large models.

Quantization Level Choices: 4-bit quantization (especially Q4_K_M and Q4_0) is the overwhelming choice. F16, as a higher-fidelity option, also holds an important position.

Metadata Complexity: Analysis of model metadata (e.g., interpreting the model family field) sometimes reveals complexities between its records and model names or common understanding, highlighting the diversity of metadata management in the open-source ecosystem.

3. Technical Infrastructure:

Hosting Environments: A large number of Ollama instances are hosted on major cloud service providers like AWS, Alibaba Cloud, Tencent Cloud, as well as in the networks of major national telecom operators.

Service Ports: Ollama's default port 11434 is the most commonly used, but a considerable number of instances are also exposed via standard web ports.

4. Objective Assessment:

Popularity of Ollama: The data clearly shows Ollama's high adoption rate among developers and AI enthusiasts worldwide.

Vibrancy of the Open-Source Ecosystem: The diversity of popular models and the widespread use of various parameter and quantization versions reflect the booming development of the open-source AI model ecosystem.

Balance in User Preferences: When choosing models, users tend to seek a balance between model capability, operational efficiency, and hardware costs.

Security and Openness: A large number of instances allow public access to their model lists, which, while convenient for the community, may also pose security risks.

5. Future Outlook:

With the emergence of more efficient, smaller models and further advancements in quantization technology, the deployment barrier for Ollama is expected to continue to decrease.

The standardization of model metadata and community-shared models is crucial for enhancing the transparency and usability of the ecosystem.

In summary, Ollama is becoming an important bridge connecting cutting-edge large language models with a broad range of developers, researchers, and even general users. This data analysis provides valuable insights for understanding its current global deployment status and user preferences.
